Dear Francesco and Scott, thank you very much for sitting down with us today and taking our questions. Could you tell us more about the combination of hyperspectral imaging with phasor analysis to simplify the process of getting images from a sample labeled with multiple fluorophores? As both of you are experts in these fields, maybe we can start by understanding a little bit more about both of these techniques.

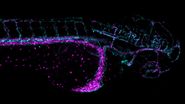

Dr. Cutrale: Hyperspectral imaging has technically been around for a while, as it was initially developed for remote sensing: airplanes flying over land, or satellites orbiting the globe. Color images that look similar can be very different when you look at their spectral composition. Hyperspectral imaging adds a new dimension to imaging, which is the wavelength. Instead of sequentially capturing single-color images in their respective channels, with hyperspectral imaging we capture one image that has a larger number of channels. Two decades ago, Scott already implemented this method of hyperspectral detection for microscopes, but his approach involved a lot of complex mathematics to do the unmixing, which means working out which contributions come from which signals in the sample. The standard algorithms were designed for satellite and remote sensing imaging, where your light source is the sun. That is a much stronger signal than in microscopy, where you have to deal with a very low signal-to-noise ratio in fluorescence. Your imaging is affected by noise, it's affected by speed, it's affected by a limited photon budget.
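
To make the idea of unmixing concrete, here is a minimal sketch of classical linear unmixing for a single pixel. It assumes the measured spectrum is a weighted sum of known reference spectra; the Gaussian-shaped spectra, the 32 channels, and the function name `linear_unmix` are illustrative assumptions, not details from the interview.

```python
import numpy as np

def linear_unmix(pixel_spectrum, reference_spectra):
    """Estimate fluorophore abundances for one pixel by least squares.

    pixel_spectrum:    shape (channels,)
    reference_spectra: shape (n_fluorophores, channels)
    """
    # Solve reference_spectra.T @ abundances = pixel_spectrum in the
    # least-squares sense.
    abundances, *_ = np.linalg.lstsq(
        reference_spectra.T, pixel_spectrum, rcond=None
    )
    return abundances

# Hypothetical emission spectra for two fluorophores on 32 channels.
channels = np.arange(32)
ref_a = np.exp(-0.5 * ((channels - 10) / 3.0) ** 2)
ref_b = np.exp(-0.5 * ((channels - 18) / 3.0) ** 2)
references = np.stack([ref_a, ref_b])

# A noise-free pixel containing 3 parts of A and 1 part of B.
mixed = 3.0 * ref_a + 1.0 * ref_b
estimated = linear_unmix(mixed, references)
```

On noise-free data the estimate recovers the mixing weights. With the low photon counts typical of fluorescence, a per-pixel fit like this becomes much less reliable, which is the limitation described above.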

How did you overcome these microscopy-specific obstacles?

Dr. Cutrale: To access the information, you need an algorithm that is robust to these types of noise, and that is simple enough to describe not only the single pixel, which might be strongly affected by noise, but the complete spectral composition of the entire sample. And that's where phasor analysis comes into play. Phasor analysis has been around for decades and is already well established for fluorescence lifetime imaging, or FLIM. Leica has integrated lifetime imaging with phasor analysis in the confocal STELLARIS 8 FALCON system, and it's an amazing instrument. For lifetime imaging, phasor analysis makes sense because these signals were originally acquired in the frequency domain. So we decided to use the phasor approach for unmixing the hyperspectral imaging data as well, and it made perfect sense.
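
As a rough illustration of what a phasor is in the spectral case, the sketch below maps each pixel's spectrum to two numbers, G and S: the normalized cosine and sine components of its first Fourier harmonic. The function name `spectral_phasor`, the array layout, and the example data are assumptions made for this sketch.

```python
import numpy as np

def spectral_phasor(cube, harmonic=1):
    """Map each pixel spectrum to phasor coordinates (G, S).

    cube: array of shape (rows, cols, channels) with non-negative counts.
    Returns two arrays of shape (rows, cols).
    """
    n = cube.shape[-1]
    angle = 2 * np.pi * harmonic * np.arange(n) / n
    total = cube.sum(axis=-1)
    # Avoid dividing by zero in pixels that contain no signal.
    safe_total = np.where(total > 0, total, 1.0)
    g = (cube * np.cos(angle)).sum(axis=-1) / safe_total
    s = (cube * np.sin(angle)).sum(axis=-1) / safe_total
    return g, s

# Hypothetical data: the same spectral shape at four different brightnesses.
channels = np.arange(32)
spectrum = np.exp(-0.5 * ((channels - 12) / 4.0) ** 2)
intensities = np.array([[1.0, 5.0], [20.0, 100.0]])
cube = intensities[:, :, None] * spectrum[None, None, :]

g, s = spectral_phasor(cube)
```

Because G and S are normalized by the total intensity, pixels that share a spectral shape land on the same point of the phasor plot regardless of brightness, so pixels with similar spectra can be grouped and examined together.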

What were the challenges when adopting phasor analysis for hyperspectral unmixing?

Dr. Cutrale: You have this limited amount of signal when you want to gently image your sample. You want to be versatile in imaging different types of samples. We started this work on hyperspectral phasors three years ago and we realized that the phasor is extremely powerful. It's really good at denoising the data, and it is really good at simplifying your analysis. But we decided to go one step further. Usually, the problem is that in order to use the phasor, if you really want to interact with it, you need to learn about phasor analysis. And many people are hesitant to learn new things beyond the many, many things that they already have to learn, or they simply do not have the time. So what if we could take advantage of the more automated types of linear unmixing and have a more versatile and sensitive phasor approach? And that's what we did here. We merged them in a hybrid version, combining the semi-automation of the standard algorithms with the sensitivity and versatility of the phasor, and integrated everything together, gaining a much higher sensitivity and a much faster algorithm for unmixing. With the current version, users don't need to interact with the phasor at all if they don't want to, but they can still take advantage of the benefits of the technique.
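
One way to picture such a hybrid, purely as an illustration and not the algorithm described in the interview, is to group pixels that fall into the same phasor bin, average their spectral shapes, and run a least-squares unmixing once per bin instead of once per pixel. All names, the bin count, and the test spectra below are assumptions.

```python
import numpy as np

def phasor_coords(spectra):
    """First-harmonic phasor (G, S) for spectra of shape (n_pixels, channels)."""
    n = spectra.shape[-1]
    angle = 2 * np.pi * np.arange(n) / n
    total = spectra.sum(axis=-1)
    total = np.where(total > 0, total, 1.0)
    return spectra @ np.cos(angle) / total, spectra @ np.sin(angle) / total

def hybrid_unmix_sketch(spectra, references, bins=64):
    """Illustrative phasor-binned unmixing (hypothetical, for explanation only).

    spectra:    (n_pixels, channels)
    references: (n_fluorophores, channels)
    Returns abundances of shape (n_pixels, n_fluorophores).
    """
    g, s = phasor_coords(spectra)
    # Map G and S from [-1, 1] onto a bins x bins grid.
    gi = np.clip(((g + 1) / 2 * bins).astype(int), 0, bins - 1)
    si = np.clip(((s + 1) / 2 * bins).astype(int), 0, bins - 1)
    _, inverse = np.unique(gi * bins + si, return_inverse=True)
    n_bins = inverse.max() + 1

    # Average the intensity-normalized spectra of all pixels in each bin.
    totals = spectra.sum(axis=1, keepdims=True)
    shapes = spectra / np.where(totals > 0, totals, 1.0)
    mean_shapes = np.zeros((n_bins, spectra.shape[1]))
    np.add.at(mean_shapes, inverse, shapes)
    mean_shapes /= np.bincount(inverse, minlength=n_bins)[:, None]

    # One least-squares unmixing per bin, then rescale by pixel intensity.
    fractions, *_ = np.linalg.lstsq(references.T, mean_shapes.T, rcond=None)
    return fractions.T[inverse] * totals

# Hypothetical reference spectra and four noise-free pixels.
channels = np.arange(32)
references = np.stack([
    np.exp(-0.5 * ((channels - 10) / 3.0) ** 2),
    np.exp(-0.5 * ((channels - 18) / 3.0) ** 2),
])
true_abundances = np.array([[3.0, 1.0], [6.0, 2.0], [1.0, 2.0], [2.0, 4.0]])
pixels = true_abundances @ references
estimated = hybrid_unmix_sketch(pixels, references)
```

In this sketch the user never looks at the phasor plot; it is only used internally to decide which pixels are averaged together. On noisy data, that averaging is where the denoising would come from, at the cost of treating all pixels in a bin as having the same spectral shape.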

That sounds like a real breakthrough, congratulations Francesco! Scott, what do you think are the real-world advantages of the combination of phasor-based analysis and hyperspectral data for life science investigators? Let's say you were to explain to a cancer researcher who is using microscopy as just another tool in the lab what this technique does for them and how it works, without them having to learn any algorithms, mathematics, et cetera?

Prof. Fraser: In short, it lets you do hyperspectral imaging and unmixing without being a hyperspectral imaging specialist. It's much the same way that you can simply take a picture with your multi-camera smartphone and unmix it just by pushing the button, or you could take a picture by setting up a tripod, putting a giant camera on it, sliding a photographic plate into it and everything else that comes with this classic procedure. The latter would be the usual process when acquiring fluorescence microscopy data in an imaging facility: there's never the right filter, and if there is the right filter, somebody has put their fingerprint on it. And almost always the microscope must take multiple exposures through multiple filters, and then we register the images to get the multicolor image. I would walk into the microscope lab to take my pictures only to find out that somebody had taken away one of the filters, put it in a different microscope, and dropped it on the floor. But the main thing is that multicolor imaging usually required collecting multiple images that were taken independently, and then trying to cut and paste them together, almost like you're making a mosaic of the images. What's powerful about our approach is that it doesn't require you to move filters around, it doesn't require multiple exposures that you need to make after changing the positions of the filters, and it doesn't require knowledge of complex algorithms and mathematics. In that respect, we are using the smartphone of hyperspectral imaging. For me, that's the big difference. And the fact that I will never again have to worry that somebody has taken the filter I need for Tuesday's experiment away and put it in another microscope on Monday.

But what about the computational tools that you need after you have the spectral image of your sample? How does this part compare to linear unmixing or other unmixing techniques that are out there today?

Prof. Fraser: Of course, the obvious answer would be that this approach is much gentler to your samples, but that's only half of the story. The typical approach has been to acquire the image, store it, sit down at the computer and analyse it. And the time gap between sitting and collecting the data and sitting and processing the data can be significant. Now, you have something that does it automatically and quickly so that you don't even notice the computation is happening. Again, like the way that a smartphone collects images from multiple camera sensors and synthesizes the picture that you see, the phasor-based analysis collects and analyses the image for you. I've had tons of users and students who thought they had finished their work. And then one or two weeks later, they found the time to analyse it and discovered they didn't have what they thought they had. It's like using an old-school film camera where you expose the film at the football game, and then sometime later in the photo lab you develop it just to see if you've got the right thing. And by that time the event is already over; you can't go back and take the photo, or organize another football game so you can make up for the photo you missed. With automatic phasor analysis you get the instant result from your samples: you extract the knowledge while you are still sitting at the microscope with your specimen.